I've searched high and low and I cannot find any definitive answer or anyone that has seen this exact same problem. I need to find a real fix but I'm at a loss.

#Typical cisco mac address mac#

Obviously I can't keep changing MAC addresses. But now the issue is spreading to other VMs that previously never exhibited the issue! Usually it's the same exact VMs that continue to be re-affected. Sometimes it's the source, sometimes its the destination, other times it requires BOTH to be changed. Most often I have to change the actual MAC but it seems random as to which machine changing the MAC actually works on. In fact, in one scenario, a simple clearing of the ARP table of the destination VM fixed the issue, but that's only worked one time. So the issue is clearly not with the MAC itself, but something that relies upon it. I found that even if I immediately reverted that new MAC back to the original MAC assigned by VMware, the connectivity remained.

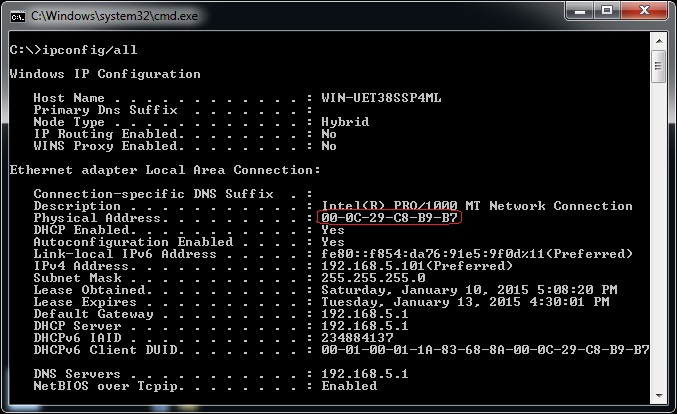

I generated a random mac address, and applied it, connectivity was again restored. The next time it occurred, I found that if I simply change the MAC within the advanced properties of the NIC within the OS, I could achieve the same success. I added a new vNIC to the system (to ensure it got a new MAC from VMware), migrated the IP over to it, and deleted the old vNIC, and magically connectivity was IMMEDIATELY restored. At one point, grasping at straws, I suspected a potential MAC address problem. I finally found something that did resolve the issue, albeit temporarily. I mean that alone rules out most of our environment as a potential culprit I would think. Even in this last scenario and when VMs are on the same subnet they STILL could not talk to each other. I've even migrated the VMs to the SAME ESXi host and even same vmnic. I've migrated them across different ESXi hosts and/or vmnics. Each of the following failed to resolve anything. I've performed a number of troubleshooting steps over the past few months to isolate the problem with little success. The specified network name is no longer available.

#Typical cisco mac address windows#

However attempts to access an actual file share leads to the typical old school Windows error "network name is no longer available". When this is occurring, I can ping back and forth between the systems and telnet sessions to 1433 and 445 connect fine. So far the symptoms are SQL timeouts (references to semaphore timeouts) or file shares inaccessible.

Often these VMs are on the same subnet as each other, other times they cross subnets. Some cases, the destination VM is a 2012 R2 VM, but it always seems to only affect these 2016/2019 source machines. anything relying on SQL connectivity or file share access from another VM). Basically services stop functioning (e.g. Ever since installing Windows 20 VMs into my VMware ESXi 6.7/6.5 environment, we've been having random connectivity issues with a number of these VMs.
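The telnet test described above (ports 1433 for SQL Server and 445 for SMB) can be scripted so it is easy to repeat the moment the problem recurs. This is a minimal sketch, assuming Python 3 is available on the source VM; `tcp_port_open` is a hypothetical helper, not part of any tool mentioned here. Like telnet, it only proves that the TCP handshake completes; it says nothing about whether the SMB or SQL session on top of that connection works, which is exactly the gap the symptoms point at.

```python
import socket
import sys


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake to host:port completes within `timeout`.

    Equivalent to a successful `telnet host port`: it does NOT validate
    the application protocol (SMB, TDS) running over the connection.
    """
    try:
        # create_connection resolves the name and performs the 3-way handshake;
        # the socket is closed immediately when the with-block exits.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical host name): python portcheck.py dest-vm.example.local
    target = sys.argv[1]
    for port in (1433, 445):  # SQL Server, SMB
        state = "open" if tcp_port_open(target, port) else "closed/filtered"
        print(f"{target}:{port} {state}")
```

If this reports both ports open while file share access still fails, the failure is above the TCP handshake, which matches what the ping and telnet tests showed.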

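The temporary workaround of applying a random MAC inside the guest OS can also be scripted. This is a minimal sketch, assuming the goal is a valid unicast, locally administered address; `random_laa_mac` is a hypothetical helper. It clears the I/G bit (bit 0 of the first octet, so the address is unicast) and sets the U/L bit (bit 1, marking it locally administered so it cannot collide with a vendor-assigned address). The Windows NIC "Network Address" advanced property typically expects 12 hex digits with no separators, which is the default output here.

```python
import secrets


def random_laa_mac(sep: str = "") -> str:
    """Return a random unicast, locally administered MAC address.

    sep="" gives e.g. '02A1B2C3D4E5' (Windows advanced-property style);
    sep=":" gives colon-separated octets.
    """
    octets = bytearray(secrets.token_bytes(6))
    # Clear bit 0 (I/G: 0 = unicast) and set bit 1 (U/L: 1 = locally administered).
    octets[0] = (octets[0] & 0b11111100) | 0b00000010
    return sep.join(f"{b:02X}" for b in octets)
```

For example, `random_laa_mac()` yields a 12-digit value whose first octet always ends in binary `10` (hex second digit 2, 6, A, or E). As noted above, this only reproduces the workaround; it does not address whatever is relying on the MAC.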